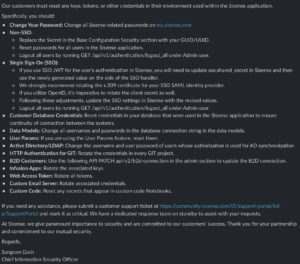

Look, we don’t yet know what really happened at Sisense. Thanks to Brian Krebs and CISA, combined with the note sent out by the CISO (bottom of this post), it’s pretty obvious the attackers got a massive trove of secrets. Just look at that list of what you have to rotate. It’s every cred you ever had, every cred you ever thought of, and the creds of your unborn children and/or grandchildren.

Brian’s article has basically one sentence that describes the breach:

Sisense declined to comment when asked about the veracity of information shared by two trusted sources with close knowledge of the breach investigation. Those sources said the breach appears to have started when the attackers somehow gained access to the company’s code repository at Gitlab, and that in that repository was a token or credential that gave the bad guys access to Sisense’s Amazon S3 buckets in the cloud.

And as to where the data ended up? Sure sounds like the dark web to me:

On April 10, Sisense Chief Information Security Officer Sangram Dash told customers the company had been made aware of reports that “certain Sisense company information may have been made available on what we have been advised is a restricted access server (not generally available on the internet.)”

So if (and that’s a very big if, this early) the first quote is correct, then here’s what probably happened:

- Someone at Sisense stored IAM user keys in GitLab. Probably in code, but that’s an open question. Could have been in the pipeline config.

- Sisense also stored a vast trove of user credentials in S3 that either were not encrypted before being uploaded (I’ll discuss that in a moment) or were encrypted with the decryption key sitting in the code.

- Bad guys got into GitLab.

- Bad guys (or girls, I’m not sexist) tested the keys and discovered they could access S3. Maybe this was obvious because the keys were in code that accessed S3, or maybe it was non-obvious and they tried good old ListBuckets.

- Attackers (is that better, people?) downloaded everything from S3 and put it on a private server or somewhere in the depths of Tor.

We don’t know the chain of events that led to the key/credential being in GitLab. There’s also a chance it was more advanced: the attacker may have stolen session credentials from a runner or something, and a static key wasn’t a factor at all. I am not going to victim blame yet; let’s see what facts emerge. The odds are decent this is more complex than what’s been reported so far.

HOWEVER

Some of you will go to work tomorrow, and your CEO will ask, “how can we make sure this never happens to us?” None of this is necessarily easy at scale, but here’s where to start:

- Scan all your repositories for cloud credentials. This might be as simple as turning on a feature of your platform, or you might have to use a static analysis tool or credential-specific scanner.

- If you find any, get rid of them. If you can’t immediately get rid of them, hop on over to the associated IAM policy and add a condition (`aws:SourceIp`) that restricts it to the source IP addresses that actually need access.

- Don’t think S3 encryption will save you. First, it’s always encrypted. The trick is to encrypt with a key that isn’t accessible from the same identity that has access to the data. So… that’s not something most people do. Encryption is almost always implemented in a way that stops no threat other than a stolen hard drive.

- If you manage customer credentials, that sh** needs to be locked down. Secrets managers, dedicated IAM platforms, whatever. It’s possible in this case all the credentials weren’t in an S3 bucket and there were other phases of the attack. But if they were in an S3 bucket, that is… not a good look.

- Sensitive S3 buckets should have an IP-based resource policy on them. All the IAM identities (users/roles) with access should also have IP restrictions. Read up on the AWS Data Perimeter.
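
To make the IP restriction a little more concrete, here is a minimal sketch of a bucket policy that denies S3 access from anywhere outside an allow-list. The bucket name, the CIDR range, and the helper function name are all made up for illustration. Treat it as a starting point under those assumptions, not a complete data perimeter.

```python
import json


def ip_restricted_bucket_policy(bucket: str, allowed_cidrs: list[str]) -> dict:
    """Build a bucket policy that denies every S3 action on `bucket`
    unless the request comes from one of `allowed_cidrs`.

    Hypothetical helper for illustration; the policy shape follows the
    standard IAM JSON policy format.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyOutsideAllowedIPs",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                # Deny when the caller's public IP is NOT in the allow-list.
                "Condition": {
                    "NotIpAddress": {"aws:SourceIp": allowed_cidrs},
                },
            }
        ],
    }


# Example values only: a placeholder bucket and a documentation CIDR range.
policy = ip_restricted_bucket_policy("example-sensitive-bucket", ["203.0.113.0/24"])
print(json.dumps(policy, indent=2))
```

Two cautions before you paste anything like this into production. `aws:SourceIp` only covers requests arriving from public IP addresses, so traffic through a VPC endpoint needs a different condition key (such as `aws:SourceVpce`). And a blanket deny like this can lock out your own admins or AWS services acting on your behalf, which is why real data perimeter policies usually carve out exceptions.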

Get rid of access keys. Scan your code and repositories for credentials. Lock down where data can be accessed from.
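
If your platform doesn’t have secret scanning you can just switch on, the core idea is simple enough to sketch. The snippet below is a rough illustration, not a replacement for a real scanner: it only looks for strings shaped like AWS access key IDs (the `AKIA` prefix for long-term IAM user keys, `ASIA` for temporary ones) in the files currently checked out. The function names are my own, and it doesn’t look at git history, which is where old keys love to hide.

```python
import re
from pathlib import Path

# AWS access key IDs are 20 characters: a 4-letter prefix (AKIA for IAM user
# keys, ASIA for temporary STS keys) followed by 16 uppercase letters/digits.
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b")


def find_access_key_ids(text: str) -> list[tuple[int, str]]:
    """Return (line_number, key_id) for every key-shaped string in `text`."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in ACCESS_KEY_ID.finditer(line):
            hits.append((lineno, match.group()))
    return hits


def scan_tree(root: str) -> dict[str, list[tuple[int, str]]]:
    """Scan every readable file under `root`, skipping the .git directory."""
    results = {}
    for path in Path(root).rglob("*"):
        if not path.is_file() or ".git" in path.parts:
            continue
        try:
            text = path.read_text(encoding="utf-8", errors="ignore")
        except OSError:
            continue  # unreadable file; a real tool would log this
        hits = find_access_key_ids(text)
        if hits:
            results[str(path)] = hits
    return results
```

Calling `scan_tree(".")` from the root of a checkout returns a mapping of file paths to the suspicious lines. Anything it finds should be treated as compromised and rotated, not just deleted from the file.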

And repeat after me,

Every cloud security failure is an IAM failure, and every IAM failure is a governance failure.

I’m really sorry for any of you using Sisense. That list isn’t going to be easy to get through.
